'AI slop' is flooding the Internet. This is how you can tell if an image is artificially generated

Fake images generated with artificial intelligence (AI) are rapidly swarming social media, flooding feeds with a new type of spam: useless "AI slop" designed to farm clicks and interactions.

Although the first generative AI (GenAI) programmes were only released in 2022, reports suggest that more than 15 billion AI-generated images are already circulating online.

OpenAI's latest data shows that users create more than 2 million images a day with DALL-E 2, its image-generation model. That amounts to approximately 916 million images in just 15 months from a single programme.

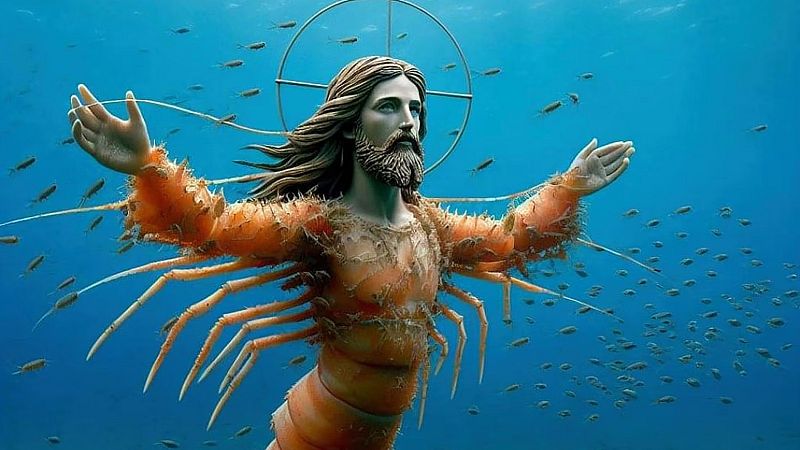

Much of this content is absurd and unrealistic, with users generating impossible images: a figure of Jesus made of shrimp, anthropomorphic animals, and gigantic titans destroying famous landmarks, to name just a few.

But the images are improving in quality, becoming more and more realistic as AI technology continues to develop at breakneck speed.

In 2024, Republican political activist Amy Kramer reshared a hyper-realistic AI image of a girl holding a puppy in the aftermath of Hurricane Helene, using it as "evidence" to criticise then-president Joe Biden.

And more recently, a French woman was conned out of €830,000 by a scammer using AI-enhanced photos of Brad Pitt.

Realistic as they are, these images are not flawless, and there is more than one way to tell whether content is artificially generated.

The devil is in the details, especially with humans

One of the most common giveaways is found in the details of the human form.

For example, AI notoriously struggles with hands, often producing fingers that are too long, too short, deformed or wrongly counted: many images end up with four, six or even seven fingers on a hand.

It can also create unnatural proportions in limbs or facial asymmetries that seem slightly off. Eyes (another frequent problem area) can appear misaligned, feature inconsistent reflections, or look lifeless despite the overall realism of the image.

Beware distorted text

Another telltale sign is distorted or unreadable text. AI models have difficulty replicating coherent words and often produce gibberish instead.

Signs, billboards, and product labels in AI-generated images frequently contain nonsensical or scrambled lettering. Such was the case with the infamous Willy Wonka Experience in Glasgow, whose AI-generated posters featured text like "encherining" and "cartchy tuns" (presumably "enchanting" and "catchy tunes").

Misaligned shadows and reflections

Lighting and shadows also help identify artificiality. Natural photographs always follow the predictable behaviour of light, but AI-generated images may display reflections and shadows that don’t align with the light source, which gives them an eerie, uncanny quality.

Many images also feature lighting that is too uniform, which makes them look almost plastic or overly polished compared with real-world photography and gives them a "Disney" appearance.

Unnaturally flawless

Beyond visual inconsistencies, AI-generated images often lack the natural imperfections found in real photographs.

Digital noise, a common element in real low-light photography, is often either completely absent or appears in a strangely uniform, artificial manner.

AI tends to over-smooth textures, making skin, surfaces and fabric appear unnaturally flawless. In some cases, AI-generated portraits look airbrushed to an extreme degree, stripped of the natural pores and blemishes of real human faces.

Another typical sign of artificially created content is repetition. This is often because AI struggles to generate truly distinct elements, which leads to cloned objects or repeated details that appear only subtly altered.

It’s important to pay close attention to elements like crowds, which may feature individuals with nearly identical faces, and background patterns, which may contain blatant repetitions.

Look for context

And even when an AI-generated image appears convincing on the surface, its context can reveal oddities and discrepancies.

Some classic signs of AI generation are objects that seem out of place and mismatched cultural or historical references. For example, traffic signs with incorrect colours, reflections that contradict reality, or architectural elements that do not fit the expected style of a given location may all be red flags.

Finally, if an image looks fine but something still seems suspicious, AI-detection tools can help verify its authenticity, though these tools are not infallible either.

A reverse image search can also help determine whether the image has appeared elsewhere online before, allowing users to trace its origin.
